
- Published on: 21.11.2024


AI-Driven Process Optimization: Maximizing Productivity with Artificial Intelligence

Artificial Intelligence is a transformative technology that is reshaping industries worldwide, creating significant pressure for businesses to adapt and innovate. Thousands of companies face the challenge of swiftly and efficiently integrating rapidly evolving AI advancements into value-driven applications. However, the effort is worthwhile: with AI services, you can automate workflows, optimize manufacturing processes, and thereby enhance overall process efficiency while achieving cost savings. A high degree of automation is a crucial prerequisite for the successful integration of AI services.

The goal is clear: companies must implement secure, value-adding, and sustainable AI services to keep pace with the competition and avoid being left behind. This requires a well-coordinated AI service infrastructure, even when integrating pre-existing models or APIs. Without a suitable infrastructure and a corresponding development approach, the use of AI technology is likely to fail.

With a comprehensive consulting approach and years of expertise in implementation, MHP offers tailored solutions for your company, providing guidance and support every step of the way. This blog post explores how MHP can help your business navigate the challenges of AI scaling and fully capitalize on the opportunities it presents.

AI in Business: Challenges in Scaling AI Services

Integrating and scaling Artificial Intelligence within a company offers tremendous opportunities, but it also poses significant challenges. Many companies struggle to scale their AI services efficiently and effectively to maximize the benefits of these technologies. Often, missing or faulty scaling can be traced back to one or more sub-problems that were addressed inadequately or incorrectly. Below, we delve deeper into the most significant pain points:

Poor data quality

One of the biggest challenges lies in ensuring high-quality and consistent data. AI models are only as good as the data they are trained on, making data quality a crucial factor. Additionally, it is essential to structure the large volume of required data efficiently and store it for further processing, so that the development of AI services is not hindered by poor data availability. Companies should therefore invest in robust data management strategies that guarantee the quality, consistency, and availability of their data. This is vital for effective AI process optimization and for enhancing overall productivity through AI.
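As an illustration of what an automated data quality gate could look like, here is a minimal Python sketch. It is not part of any MHP tooling; the names `QualityReport` and `check_quality`, the 5% missing-value limit, and the sample sensor records are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    total_rows: int
    missing_by_field: dict
    duplicate_rows: int

    def passes(self, max_missing_ratio: float = 0.05) -> bool:
        """True if there are no duplicates and no field exceeds the missing-value limit."""
        if self.total_rows == 0:
            return False
        worst = max(self.missing_by_field.values(), default=0)
        return self.duplicate_rows == 0 and worst / self.total_rows <= max_missing_ratio


def check_quality(rows: list, required_fields: list) -> QualityReport:
    """Count missing required values and exact duplicate rows (hypothetical helper)."""
    missing = {field: 0 for field in required_fields}
    seen = set()
    duplicates = 0
    for row in rows:
        for field in required_fields:
            # Treat None and empty strings as missing; 0 is a valid value.
            if row.get(field) in (None, ""):
                missing[field] += 1
        # Rows are compared by their full content; values must be hashable.
        key = tuple(sorted(row.items()))
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return QualityReport(len(rows), missing, duplicates)


# Made-up sensor records: one missing temperature, one exact duplicate.
rows = [
    {"id": 1, "temp": 20.5},
    {"id": 2, "temp": None},
    {"id": 1, "temp": 20.5},
]
report = check_quality(rows, ["id", "temp"])
print(report.missing_by_field, report.duplicate_rows, report.passes())
```

A check like this would typically run before training or inference, so that flawed data is caught before it reaches the model.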

Faulty system design

A common barrier to implementing AI services is inadequate or flawed system design. For AI services to be successfully integrated, the system design must not only meet the specific requirements of the AI model but also align with the nature of the problem being addressed. This means the system needs to incorporate the appropriate algorithm and the necessary resources to tackle the challenge efficiently. A robust and scalable system design must be flexible enough to adapt to changes in data flow and model requirements, while ensuring a smooth and sustainable integration. Only with such a well-constructed system can AI services be effectively operated and optimized in the long term, driving AI process optimization and ultimately increasing productivity.

Dynamic AI models

In a dynamic system with constantly changing environmental conditions, the data being processed evolves continuously, requiring real-time adjustments to AI models. To maintain efficiency and ensure consistent, high-quality outputs, it is crucial to automate these adjustments and subject them to rigorous quality checks throughout the entire lifecycle of the AI service. By continuously fine-tuning AI models in this way, companies support the ongoing optimization of their AI processes and achieve long-term improvements in performance and productivity.
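One simple way to automate the decision of when a model needs adjusting is to compare recent input data against the data the model was trained on. The sketch below uses a basic mean-shift score. The function names, the threshold of 2.0, and the sample values are assumptions for illustration, and real systems often rely on more robust drift measures.

```python
from statistics import fmean, stdev


def drift_score(reference: list, recent: list) -> float:
    """Shift of the recent mean from the reference mean, in reference standard deviations."""
    spread = stdev(reference)
    if spread == 0:
        raise ValueError("reference data has no variation")
    return abs(fmean(recent) - fmean(reference)) / spread


def needs_retraining(reference: list, recent: list, threshold: float = 2.0) -> bool:
    """Flag the model for adjustment when the drift score exceeds the (assumed) threshold."""
    return drift_score(reference, recent) > threshold


# Made-up values for one numeric input feature.
training_values = [10.0, 11.0, 9.0, 10.0, 10.0]
stable_batch = [10.0, 10.5, 9.5]
shifted_batch = [13.0, 14.0, 12.0]

print(needs_retraining(training_values, stable_batch))
print(needs_retraining(training_values, shifted_batch))
```

In an automated pipeline, a positive result would trigger retraining or fine-tuning, followed by the quality checks described above before the updated model is rolled out.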

High development speed of AI services

Not only do environmental conditions and data change, but AI services themselves also evolve rapidly. The leaps in innovation within the field of Artificial Intelligence remain substantial, requiring companies to ensure they can keep pace with the rapid development of AI applications to continuously benefit from the latest technologies. Failing to do so will quickly cause your company to fall behind competitors, resulting in a significantly weaker market position.

Differences in challenges and tasks for AI and GenAI

Classical AI: Requires extensive resources for training models. The main focus is on developing and training models, as well as their continuous optimization and versioning.

Generative AI (GenAI): Streamlines the traditional AI workflow by relying heavily on pre-trained models, significantly reducing the need for extensive training. The emphasis shifts to optimizing the surrounding processes to maximize efficiency. Choosing the right model is crucial: it must fit the specific task, whether that is text processing, image analysis, or time series prediction. Alongside functional suitability, cost efficiency plays a pivotal role. Companies must closely monitor operational costs, including computational power and storage requirements, to maintain both the effectiveness and cost-efficiency of their GenAI solutions.
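The trade-off between functional suitability and cost can be sketched in a few lines of Python. The model names, task labels, prices, and the `pick_model` helper below are purely hypothetical, and picking the cheapest suitable option is a deliberate simplification that ignores output quality.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOption:
    name: str
    tasks: frozenset
    cost_per_1k_requests: float  # hypothetical pricing unit


def pick_model(options, task, monthly_requests, monthly_budget) -> Optional[ModelOption]:
    """Return the cheapest option that supports the task and fits the budget, or None."""
    affordable = [
        option
        for option in options
        if task in option.tasks
        and option.cost_per_1k_requests * monthly_requests / 1000 <= monthly_budget
    ]
    return min(affordable, key=lambda option: option.cost_per_1k_requests, default=None)


# Made-up catalog of pre-trained models.
catalog = [
    ModelOption("large-general", frozenset({"text", "image"}), 4.0),
    ModelOption("small-text", frozenset({"text"}), 0.5),
    ModelOption("forecaster", frozenset({"time_series"}), 1.0),
]

choice = pick_model(catalog, "text", monthly_requests=100_000, monthly_budget=100.0)
print(choice.name if choice else "no suitable model within budget")
```

Returning `None` when nothing fits makes the budget conflict explicit, so it can be resolved as a business decision rather than hidden in the code.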

MHP’s Solution Approach for Effective Scaling of AI Services

The effective scaling of AI services requires a strategic approach that optimally integrates technology, people, and processes. MHP offers a comprehensive solution approach based on years of experience and a clear focus on scalability and efficiency.

AI engineering as a holistic approach for optimal scaling

AI engineering plays a central role in the development, implementation, and maintenance of AI systems. By combining software engineering, data science, system architecture, and MLOps, the goal is to create scalable, reliable, and efficient AI solutions.

The core aspects of MLOps are:

Automation: MLOps automates the entire lifecycle of machine learning models, from data collection and preprocessing to training, validation, deployment, and monitoring. Continuous integration and deployment (CI/CD) ensure seamless and efficient model development.

Collaboration: Cross-functional teams enable interdisciplinary collaboration between data scientists, data engineers, DevOps, and other stakeholders. This ensures that all parties are aligned and can collaborate efficiently to improve the quality and speed of model development.

Scalability: MLOps ensures that ML systems can handle large data volumes and high request loads. This is crucial for scaling and delivering robust and powerful models in production environments.

Security and compliance: Implementing security measures and complying with regulations is an integral part of MLOps. This includes protecting sensitive data, ensuring data integrity, and adhering to privacy regulations to ensure the secure and compliant operation of models.

Monitoring and management: Continuous monitoring and versioning of models are essential to ensure their performance and functionality. This includes monitoring model metrics, managing model versions, and implementing error detection and correction mechanisms.

System operation: MLOps also encompasses the operation and maintenance of ML models and platforms. This involves managing resources, optimizing system performance, and ensuring the availability and reliability of ML services during their application.

The key goals of AI engineering are:

- Creating scalable and efficient AI solutions: By combining various disciplines, it ensures that AI applications are robust and powerful.

- Effective management of AI models: From conception through development to production, the entire lifecycle of the models is covered.

- Continuous improvement and operation of AI applications: Regular updates, monitoring, and optimizations ensure that the models remain up to date and functional.

AI engineering spans the development cycle with several interdependent steps across different operational environments. During the proof-of-concept phase, the basic functionality of the model is tested in a locally confined environment using test data. If successful, the model proceeds to a pilot phase, where it is tested as a Minimum Viable Product (MVP) with increasing maturity across various development, testing, and real-world environments. If this test goes as planned, the release phase follows, where the model is deployed in the production environment with full functionality and seamless integration into the operational system.
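The phase progression described above can be thought of as a simple gate: a model only moves forward when the tests of its current phase succeed. The following Python sketch illustrates that idea. The `Stage` enum and `next_stage` function are assumptions for this example and do not reflect an actual MHP implementation.

```python
from enum import Enum


class Stage(Enum):
    PROOF_OF_CONCEPT = 1
    PILOT = 2
    RELEASE = 3


def next_stage(current: Stage, tests_passed: bool) -> Stage:
    """Advance one stage when the current stage's tests pass; otherwise stay put."""
    if not tests_passed or current is Stage.RELEASE:
        return current
    return Stage(current.value + 1)


# Walk a model through the phases with made-up test outcomes.
stage = Stage.PROOF_OF_CONCEPT
for outcome in [True, False, True]:
    stage = next_stage(stage, outcome)
    print(stage.name)
```

Keeping the gate explicit means a failed pilot test holds the model in the pilot phase instead of letting it slip into production.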

The approach based on AI engineering is independent of the AI model, making it easily integrable into a standardized solution landscape. At the same time, it still allows for customized and client-specific approaches to ensure optimal customer benefit.

Cross-functional team approach (ONE Team)

MHP relies on a cross-functional team approach, known as the ONE Team.

This team consists of:

- AI product manager: Integrates data requirements and prioritizes product features, acting as the interface between the client and the development team.

- Data scientist: Conducts experiments and develops and selects models.

- Data (infrastructure) engineer: Builds the data infrastructure.

- AI engineer: Oversees the transfer, scaling, integration, monitoring, and troubleshooting of the ML model.

- Software/frontend engineer: Implements the user interface as a frontend, dashboard, or application, depending on the AI use case.

The advantages of MHP's ONE Team are manifold and contribute significantly to the successful scaling of AI services. Through interdisciplinary collaboration, where various areas of expertise are combined within a single team, innovative and effective solutions are developed. The decentralization of expertise means that experts for different areas are distributed across the organization, each responsible for specific AI products. At the same time, there is centralization of the product teams, with a central team, the Centre of Excellence, uniting all skills and roles to develop AI products for the entire organization. Each of our roles has specific expertise in its area as well as a broad competence profile. This allows us to keep track of all relevant interfaces and effectively manage them.

Holistic approach for optimal scaling through AIDevOps@MHP with CRISP-AI framework

At MHP, we rely on our self-developed framework AIDevOps@MHP as the foundation for rapid and targeted implementation.

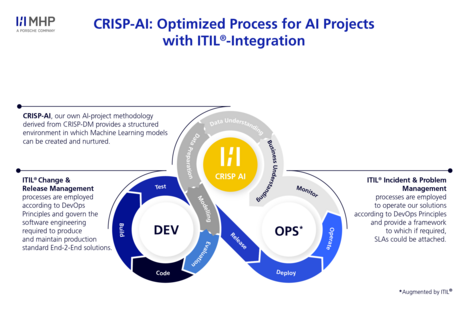

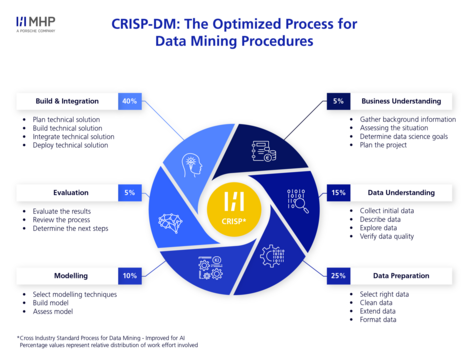

AIDevOps@MHP is based on CRISP-AI, which in turn is derived from CRISP-DM (Cross Industry Standard Process for Data Mining) and extended by Deploy-and-Run aspects. CRISP-DM is a standardized framework for data mining processes that describes activities over six phases: business understanding, data understanding, data preparation, modeling, evaluation, and deployment.

The AIDevOps@MHP framework goes several steps beyond traditional MLOps by combining CRISP-AI approaches with DevOps principles and ITIL (IT Infrastructure Library) processes.

The application of DevOps principles enables fast product deployment and reduces architectural complexity. It also promotes an improved work culture within the teams. These principles are particularly suitable for exploratory projects in data science, as they offer flexibility and efficiency in the development and implementation of solutions. Additionally, the integration of ITIL processes ensures that the necessary phases for the development of a product or service are properly followed.

Through the intelligent integration of these three domains, the advantages over a pure MLOps approach are clear:

- Integration of software development and AI: AIDevOps@MHP combines DevOps principles with the requirements of AI and ML, extending the automation and management of ML models to the development of AI-based applications, and fosters collaboration between data scientists, developers, and IT teams.

- End-to-end solution approach: Unlike MLOps, which focuses on model deployment and maintenance, AIDevOps@MHP covers the entire lifecycle of an AI solution, from development to optimization, and dynamically adapts to business requirements.

- Scalability and flexibility: AIDevOps@MHP offers an infrastructure optimized for AI-driven applications, allowing for flexible and efficient scaling through the combination of DevOps and ML, thereby increasing the adaptability of AI services.

- Automation and continuous delivery: AIDevOps@MHP goes beyond model automation in MLOps, emphasizing continuous integration and delivery (CI/CD) of AI applications, enabling faster time-to-market and greater flexibility.

- Improved governance and compliance: Compared to MLOps, AIDevOps@MHP provides enhanced governance and compliance features, ensuring that AI applications adhere to legal and ethical standards, with comprehensive documentation of any changes.

The use of AIDevOps@MHP enables continuous monitoring, incident management, and troubleshooting at every process step, helping you maintain and regularly review the quality of your code, AI models, and data. Additionally, your company has the opportunity to integrate ethical considerations into the entire lifecycle of AI applications, alongside purely economic and rational aspects. This includes incorporating transparency, fairness, accountability, privacy, security, and reliability. Combining process automation with ethical compliance checks ensures that these standards are regularly reviewed and upheld.

AI Process Optimization: Effective Scaling of AI Services with MHP

Scaling AI services presents a variety of challenges for your company, but with a targeted and comprehensive approach, these can be overcome. Whether it’s poor data quality, flawed system design, changing conditions and data, or the fast-paced innovations in AI – through the use of AIDevOps@MHP, you can master the complexities of AI development and create sustainable, value-generating solutions.

With full compatibility with existing systems and the ability to make individual and custom adjustments, AIDevOps@MHP is the right approach to enhance your productivity and efficiency through automated processes and minimal maintenance effort. A high level of automation ensures that AI services can be operated reliably and efficiently, giving your company a competitive edge in terms of market position and customer retention.

With our extensive expertise, we support and guide you in implementing and executing AI services. Using a cross-functional team approach and tailored solutions, MHP helps your company tackle the challenges of AI scaling and leverage the opportunities to the fullest. Where exactly do your challenges lie, and how can we help you solve them? Contact us and get personal advice from our experts to find the right solution for your company.

FAQ

AI-driven process optimization significantly enhances your company’s efficiency by automating routine tasks and reducing human errors. With AI, large volumes of data can be analyzed quickly and accurately, leading to better and more informed decision-making. The high level of automation in AI services ensures continuous and reliable operations with minimal maintenance effort. Additionally, a well-implemented AI service infrastructure can help optimize business processes and increase your company’s competitiveness.

Companies must implement robust data management strategies, which include continuous monitoring and cleaning of data to identify and correct errors and inconsistencies early on. A solid system design, which is both flexible and scalable, ensures that the specific requirements of AI are met. Automation also plays a key role by streamlining processes and minimizing human errors. Additionally, companies should ensure that their data is accessible, interoperable, and compliant with privacy regulations to ensure sustainable use and integration of AI services.

AIDevOps@MHP differs from traditional DevOps practices in its special focus on AI services. While traditional DevOps practices aim at the rapid deployment and integration of software, AIDevOps@MHP combines the principles of CRISP-AI (which is derived from CRISP-DM), DevOps, and ITIL to ensure seamless development and implementation of AI solutions. This framework places a strong emphasis on the automation and optimization of the entire AI lifecycle, from data collection to model deployment and continuous monitoring. It also integrates ethical considerations such as transparency, fairness, and data privacy throughout the process. Through this holistic and interdisciplinary approach, AIDevOps@MHP delivers customized and sustainable AI solutions specifically tailored to the challenges and needs of modern businesses.

To ensure AI solutions integrate seamlessly into existing IT systems, a clear definition of the software architecture is crucial. This architecture must meet the specific requirements of AI technology while remaining flexible and scalable. A high degree of automation plays a key role in making processes efficient and in identifying and addressing potential issues early. Additionally, AI solutions must be interoperable and compliant with data protection regulations to ensure smooth integration. By leveraging a cross-functional team that combines diverse expertise, MHP can develop customized solutions tailored to your unique needs and challenges.

MHP supports companies in implementing and scaling AI services through a holistic approach that effectively integrates technology, people, and processes. Agile teams composed of software engineers, data scientists, and AI product managers work purposefully on customized solutions. By incorporating machine learning, computer vision, and natural language processing (NLP), MHP creates significant added value. MLOps and AI engineering ensure the automation and optimization of the entire AI lifecycle, leading to efficient and scalable AI solutions. This comprehensive approach ensures that AI services are not only technically flawless but also commercially valuable.

The biggest difference between AI and GenAI projects lies in their approach and focus. Classical AI requires significant resources for training models, with an emphasis on development, training, and continuous optimization. In contrast, GenAI largely eliminates the need for training by relying on pre-trained models. The focus of GenAI shifts to optimizing surrounding processes, allowing for a leaner implementation but requiring careful customization. In GenAI projects, the emphasis is more on AI engineering than on data science, as more resources are needed for the data preparation process.