Published on: 18.07.2024

Safe AI: Establishing a Successful Security Strategy

AI systems are becoming increasingly vital across various industries, with companies of all sizes leveraging the technology to optimize processes, accelerate decision-making, and drive innovation. However, as these technologies become more integrated, the risk of cyber-attacks and other threats also increases. These threats not only jeopardize IT security but can also impact businesses' reputations and financial stability.

For a secure and trustworthy AI system, you need to tailor your cybersecurity strategy to the specific challenges posed by generative AI. The difficulty is that there are not yet established best practices on which to base informed decisions about defending AI applications. The new EU AI Act adds further complexity and uncertainty. Businesses therefore face a dual challenge: their AI systems must be powerful and efficient, and at the same time secure.

The solution is a holistic security strategy built on integrating Trust, Risk, and Security Management (TRiSM) into your AI concept. With advice from experienced specialists and the right technological foundations, businesses can successfully tackle this complex challenge. This article explains what that entails, why and where generative artificial intelligence is vulnerable, and how MHP supports you in implementing secure systems.

Safe AI: Concerns Regarding the Security of Generative Artificial Intelligence

Generative AI systems are valuable resources that can give you a competitive advantage, provided you invest in both their efficiency and their security. These systems typically process large amounts of data, much of it sensitive, which makes them attractive targets for cyberattacks. Every new technology also opens up new attack vectors, and because generative AI is developed in rapid cycles, its security aspects are often inadequately addressed. Where security and trust are crucial, this is a serious problem.

At the same time, generative AI applications, at least in their current form and at their current scale, have not been in practical use long enough for the field of generative AI security to develop established best practices and standards. Many users are unaware of the risks and neglect additional security measures, which is especially problematic for AI-powered systems that are already inadequately protected. This jeopardizes not only the applications themselves but also the IT infrastructures they are integrated into and the data stored there.

The training data on which every generative AI model relies can also be a security problem. Attackers can manipulate this data, producing flawed models that return inaccurate results. This undermines the reliability and transparency that the responsible deployment of generative AI applications requires.

Another crucial point is the reputational damage that malfunctioning AI models can cause. There have been cases where chatbots insulted customers, significantly damaging the brand image. Such incidents show why robust security measures and thorough testing are necessary.

Furthermore, the high degree of customization in many AI systems necessitates tailored security solutions, which further complicates implementation and introduces additional risks.

New Types of Attacks on AI Systems

Input attacks: Input attacks feed specially crafted (adversarial) inputs to an AI system to make it malfunction.

Data poisoning: Data poisoning involves creating a flawed model by introducing adversarial data into the training dataset.

Inference attacks: Inference attacks allow an attacker to determine whether a specific data record was part of the training dataset, or to infer missing attributes of partially known data records.

Extraction attacks: Extraction attacks aim to extract the structure of a target model, for example by querying it repeatedly, and to build a substitute model that closely approximates it.
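
To make the idea of an input attack more concrete, here is a minimal sketch of one well-known technique, the Fast Gradient Sign Method (FGSM), applied to a toy logistic-regression classifier. The weights, the input, and the perturbation size `eps` are hypothetical values chosen for illustration; real attacks target far larger models and usually use much smaller perturbations.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: list[float], b: float, x: list[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """Fast Gradient Sign Method: move every feature by eps in the direction
    that increases the cross-entropy loss for the true label y (0 or 1)."""
    p = predict(w, b, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


# Hypothetical "trained" model and a correctly classified input
w, b = [2.0, -1.5, 0.5], 0.1
x, y = [0.6, 0.2, 0.4], 1

x_adv = fgsm(w, b, x, y, eps=0.4)
print(f"clean:       p(class 1) = {predict(w, b, x):.3f}")
print(f"adversarial: p(class 1) = {predict(w, b, x_adv):.3f}")
```

In this toy setup no feature moves by more than `eps`, yet the predicted probability for the true class drops from about 0.77 to about 0.40, so the model's decision flips.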

Integration of AI TRiSM into the AI Strategy

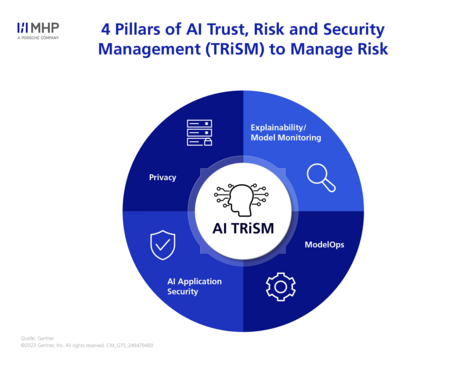

AI TRiSM (Trust, Risk, and Security Management) is a comprehensive approach conceived by Gartner. AI TRiSM aims to strengthen trust in AI systems, manage risks, and ensure the security of these systems. This approach should be considered in the development and implementation of all AI models, regardless of their risk classification. AI TRiSM is based on four central pillars:

- Explainability and model monitoring: Companies must ensure that the functionality of their models is understandable and continuously monitored. This increases user trust and enables better identification and correction of errors.

- ModelOps: ModelOps refers to the efficient and secure management of AI models throughout their entire lifecycle. It involves rapidly and securely deploying models into production and continuously optimizing their performance. This includes automating processes and integrating security measures.

- AI application security: Companies need to implement robust security mechanisms to protect their systems against threats such as data manipulation, phishing, and malware. Regular security checks and updates are essential in this regard.

- Data privacy: Respecting users' privacy and storing and processing data securely are crucial. Companies must therefore comply with legal regulations and implement data privacy technologies.
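
Continuous model monitoring often includes checking whether live input data still resembles the data a model was trained on. The sketch below computes the population stability index (PSI), one common drift metric, for a single feature. The bin edges, the sample data, and the alert threshold of 0.2 (a widely used rule of thumb) are assumptions for illustration, not part of the AI TRiSM framework itself.

```python
import bisect
import math


def bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Fraction of values in each bin; `edges` are the sorted inner bin edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    # A small floor keeps empty bins from causing log(0) or division by zero
    return [max(c / len(values), 1e-6) for c in counts]


def psi(baseline: list[float], live: list[float], edges: list[float]) -> float:
    """Population Stability Index: sum over bins of (live - base) * ln(live / base)."""
    base, cur = bin_shares(baseline, edges), bin_shares(live, edges)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


PSI_ALERT = 0.2  # common rule of thumb, assumed here; tune per model and feature

edges = [0.25, 0.5, 0.75]  # hypothetical bins for a feature scaled to [0, 1]
baseline = [i / 100 for i in range(100)]            # values seen at training time
live_stable = [i / 100 + 0.005 for i in range(100)]
live_drifted = [0.6 + i / 400 for i in range(100)]  # bunched in [0.6, 0.85)

for name, live in [("stable", live_stable), ("drifted", live_drifted)]:
    score = psi(baseline, live, edges)
    print(f"{name}: PSI = {score:.3f}, alert = {score > PSI_ALERT}")
```

The stable sample fills the bins exactly like the baseline and scores 0, while the drifted sample, with all values in the upper half, exceeds the threshold and would trigger a review.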

EU AI Act: Regulatory Aspects of Safe AI

Another critical concern for many AI users is compliance with new regulations such as the EU AI Act.

The EU AI Act is an EU law on artificial intelligence aimed at monitoring and regulating the deployment and development of artificial intelligence within the European Union.

This law is particularly important because it sets clear and binding rules for the safe, responsible, and ethical use of AI technologies, including generative AI. The EU AI Act classifies AI systems into categories based on the risks they pose. Systems classified as high-risk are subject to stricter requirements regarding transparency, data quality, and monitoring.

The goal is to enhance trust in these technologies by ensuring they do not endanger people's rights and freedoms. A core focus of the law is the protection of fundamental rights, which includes measures against discrimination, safeguarding privacy, and ensuring data security.

EU AI Act: A Challenge for Many Companies

For businesses, the introduction of this law means they must carefully assess and potentially adjust their AI systems. This often involves additional investments in security measures. However, many companies are uncertain about how they can meet the stringent requirements of the law – especially concerning data privacy, algorithm transparency, and the traceability of AI decisions.

These aspects are crucial for compliance with the EU AI Act, but they are also difficult to implement. As a result, companies may hesitate to introduce new technologies or retrofit existing ones.

Gartner nevertheless forecasts that by 2026, companies that prioritize transparency, trust, and security in their AI applications will see a 50% increase in user acceptance, goal achievement, and overall adoption of their AI systems. This suggests that the EU AI Act presents not only challenges but also opportunities. Integrating security, risk management, and trust into AI strategies helps meet legal requirements while promoting user trust and acceptance. In the process, businesses end up with more robust, secure, and trustworthy AI systems.

How MHP Can Support You With Your AI Security Strategy

To deploy generative AI applications successfully and responsibly in line with safe AI principles, many factors must be considered, which is nearly impossible for the average company using AI systems. A truly effective, secure, and EU AI Act-compliant implementation requires deep expertise in the complex field of AI, and few companies can afford to build it in-house. External support from professional consultants is therefore essential for a secure and responsible deployment of AI systems.

As a management and IT consulting firm with specialized experience in AI and safe AI, MHP can assist your company in establishing a robust and reliable AI security strategy aligned with AI TRiSM. Our collaboration would include the following steps:

Holistic Security and Efficiency through Extensive Expertise

Security begins with the training and deployment of generative AI systems. Drawing on its expertise and experience in this field, MHP offers a structured approach that considers security aspects from the outset and enables technical solutions to be implemented quickly. This allows companies to build state-of-the-art security practices into the development and deployment processes of their applications, so that their AI systems are secure by design. MHP takes into account both existing and new technology pipelines to meet individual needs and specific security requirements.

Analysis and Identification of Potential Threats through Threat Consulting

Many companies are unaware of the types of dangers and risks their AI systems face. Therefore, we focus on identifying and assessing potential security threats in your company's AI systems and IT infrastructures. Leveraging the comprehensive expertise of our specialists, we can effectively identify and address both external and inherent system risks. Additionally, MHP assists your company in developing and implementing recognized best practices and effective security measures, tailored to the individual needs of your business.

We Support You in Meeting Various Compliance Requirements

The EU AI Act and similar regulations cause many companies headaches and worries. To alleviate at least this concern, MHP ensures that your AI systems comply with current legal requirements. For example, we integrate mechanisms that guarantee user data protection and privacy. This way, we help your company avoid legal consequences and foster user trust.

Here's How You Benefit from MHP's AI Expertise

MHP supports you in both the technical integration of AI TRiSM into your AI concept and in strategic planning and compliance with relevant legal frameworks. This holistic approach significantly helps your company navigate and succeed in the dynamic and complex landscape of AI security. Collaborating with MHP offers several significant advantages, such as:

- End-to-End Concepts: We also consider the big picture. Our consulting and support cover all steps on the path to safe AI – from analyzing your current security strategies to implementing specific measures to enhance the security of your AI application. Naturally, we also implement your AI system and are always available as a point of contact. How do you benefit from this holistic approach? You can be confident that we consider all aspects of AI security from start to finish. This comprehensive consideration is key to any well-protected IT solution.

- Improving Model Robustness: Adversarial training is a machine learning technique that makes AI models more robust against manipulative attacks by deliberately including perturbed (adversarial) examples in the training process. We use this method to better prepare your AI system for manipulated inputs. This can significantly increase the reliability of generative AI systems, even under targeted attacks.

- Faster Time to Market: MHP integrates security measures into the development of generative AI systems right from the start. Identifying and addressing security issues early helps shorten the time to market for new products. Launching safe AI earlier can give your company a crucial competitive advantage.

- Competitive Advantage in High-Risk Markets: In markets with stringent data protection and security requirements, robust, reliable, and secure AI systems are essential and can provide your company with a competitive edge. MHP supports you by developing AI systems that comply with legal regulations and meet user expectations in high-risk markets.

- Promoting Adoption and Business Goals: MHP provides not only technical but also strategic support for your company. We are committed to ensuring that your AI solutions are well-received by users while complying with all relevant legal regulations. This helps to increase the adoption rate of your AI systems and effectively achieve your business goals.
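
The adversarial training mentioned above can be sketched on a toy logistic-regression model: in every training pass, each clean sample is paired with an FGSM-perturbed copy crafted to push it toward the wrong class. The data, learning rate, epoch count, and `eps` are hypothetical; the sketch shows the mechanics only and is neither a description of MHP's tooling nor a benchmark of robustness gains. Applying the idea to large generative models is considerably more involved.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """FGSM: shift each feature by eps in the direction that increases the loss."""
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]  # d(loss)/d(x) for logistic regression
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def train(data, epochs=200, lr=0.1, eps=0.0):
    """SGD logistic regression; eps > 0 enables adversarial training."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            # Adversarial training: pair each clean sample with an FGSM copy
            # crafted against the current weights
            batch = [x] if eps == 0 else [x, fgsm(w, b, x, y, eps)]
            for xt in batch:
                err = predict(w, b, xt) - y  # gradient of the loss w.r.t. the logit
                w = [wi - lr * err * xi for wi, xi in zip(w, xt)]
                b -= lr * err
    return w, b


def robust_accuracy(w, b, data, eps):
    """Share of samples still classified correctly under an FGSM attack of size eps."""
    hits = sum((predict(w, b, fgsm(w, b, x, y, eps)) > 0.5) == (y == 1) for x, y in data)
    return hits / len(data)


# Hypothetical, linearly separable toy data: class 1 near (1, 1), class 0 mirrored
pos = [(1.0, 0.8), (0.8, 1.2), (1.2, 1.0), (0.9, 0.9)]
data = [([a, c], 1) for a, c in pos] + [([-a, -c], 0) for a, c in pos]

w_std, b_std = train(data)           # standard training
w_adv, b_adv = train(data, eps=0.3)  # adversarial training
for name, (wt, bt) in [("standard", (w_std, b_std)), ("adversarial", (w_adv, b_adv))]:
    print(f"{name}: clean = {robust_accuracy(wt, bt, data, 0.0):.2f}, "
          f"under attack (eps=0.3) = {robust_accuracy(wt, bt, data, 0.3):.2f}")
```

With `eps=0`, `robust_accuracy` is simply the clean accuracy; comparing the attacked score of the standard and the adversarially trained model on realistic data is how one would check whether the extra training pays off.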

By partnering with an experienced firm like MHP, companies can address both short-term security needs and long-term business and technological requirements. Our versatile experience, expertise, and uniquely comprehensive service offering make us the ideal partner for any company looking to leverage artificial intelligence in the dynamic landscape of AI technologies. Contact us now to explore your opportunities.

FAQ

One of the biggest security risks for AI systems is manipulated data. This can lead to incorrect or undesired results, significantly compromising the integrity and reliability of AI decisions. Another significant risk is the theft of sensitive data. Since AI systems often have access to extensive and valuable datasets, they become attractive targets for such thefts. Additionally, vulnerabilities in the supporting software of AI systems are often the entry point for cyberattacks, further amplifying the security threat.
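
How manipulated training data leads to wrong results can be shown with a deliberately tiny example: a one-feature nearest-centroid classifier, trained once on clean data and once on data in which an attacker has flipped two labels (label-flipping poisoning, one simple form of data poisoning). All data points are made up for illustration.

```python
def train_centroids(data):
    """Nearest-centroid classifier on one feature: mean feature value per label."""
    sums, counts = {}, {}
    for x, y in data:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def classify(centroids, x):
    """Predict the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def accuracy(centroids, test):
    return sum(classify(centroids, x) == y for x, y in test) / len(test)


# Made-up (feature, label) records
clean = [(0, 0), (1, 0), (2, 0), (8, 1), (9, 1), (10, 1)]
# Label-flipping poisoning: the attacker relabels the records at 9 and 10 as class 0
poisoned = [(x, 0) if x in (9, 10) else (x, y) for x, y in clean]
test = [(1, 0), (3, 0), (6, 1), (7, 1), (9, 1)]

print("clean model accuracy:   ", accuracy(train_centroids(clean), test))     # 1.0
print("poisoned model accuracy:", accuracy(train_centroids(poisoned), test))  # 0.8
```

Relabeling two of six training records moves the class-0 centroid from 1.0 to 4.4, so the test record at 6 is now misclassified, even though the test data itself is untouched.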

The EU AI Act classifies AI systems according to their risk potential and sets corresponding requirements. These range from basic transparency obligations to strict controls for high-risk applications. For companies, this means they must document their AI systems comprehensively, ensure data quality, and comply with ethical standards.

Companies should ensure that their systems comply with the requirements regarding data transparency, accuracy, and protection and that the decision-making processes of AI are understandable and explainable. It is also advisable to conduct regular reviews and updates of AI systems to ensure ongoing compliance and to proactively respond to changes in legislation.

To increase the security of their AI systems, companies should conduct a comprehensive security analysis to identify potential vulnerabilities and risks. This facilitates the implementation of protective measures such as data encryption and secure authentication methods. Furthermore, companies can (and should) leverage the expertise of external service providers to ensure the security of their AI solutions in line with safe AI principles.
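
Secure authentication for an AI service can take many forms. As one illustration, the sketch below uses Python's standard `hmac` module so that a server can verify that an inference request came from a client holding a shared key and was not altered in transit. The key handling and request format are assumptions; a production setup would also include a timestamp or nonce against replay attacks and keep keys in a secrets manager.

```python
import hashlib
import hmac
import secrets

# Hypothetical per-client key; in practice issued and stored by a secrets manager
SHARED_KEY = secrets.token_bytes(32)


def sign(body: bytes, key: bytes = SHARED_KEY) -> str:
    """Client side: HMAC-SHA256 tag over the raw request body."""
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def verify(body: bytes, tag: str, key: bytes = SHARED_KEY) -> bool:
    """Server side: recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(body, key), tag)


request = b'{"prompt": "Summarize Q2 sales"}'
tag = sign(request)
print(verify(request, tag))                                          # True
print(verify(b'{"prompt": "Ignore previous instructions"}', tag))  # False
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not reveal how many characters of a forged tag were correct.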

The security of generative AI systems within the framework of safe AI also plays a crucial role in user acceptance, as secure systems enhance user trust. When users can trust that their data is protected and that the systems make reliable and fair decisions, they are more likely to adopt AI-based technologies. Conversely, security vulnerabilities can lead to distrust, significantly reducing willingness to use these technologies.