
- Published on: 30.01.2025


EU AI Act: Key Aspects for Compliance with the Regulation

The increasing use of artificial intelligence (AI) has made clear regulatory frameworks within the EU essential, because AI also brings risks for businesses and citizens alike. Improper use of AI and the data it relies on can lead to data privacy breaches, discrimination, and biased decision-making. Additionally, security risks, such as the manipulation or misuse of AI systems, pose significant threats.

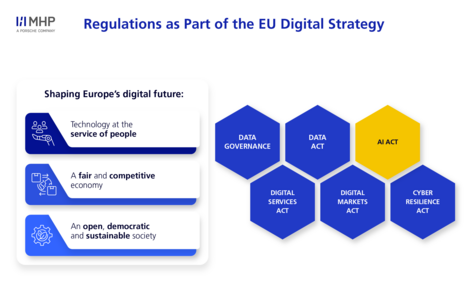

As part of the EU Digital Strategy, the EU AI Act represents a crucial milestone in regulating AI systems. The overarching goal of the EU regulations within this strategy is to position the EU as a leader in the digital industry. Key objectives include protecting users and operators through trustworthy technology, creating an EU-wide data marketplace, and safeguarding democratic principles in the digital society. The EU AI Act plays a fundamental role in this strategy, aiming to build widespread trust in AI technologies and minimize these risks. Starting in February 2025, businesses will need to meet initial requirements. Non-compliance could result in significant penalties, emphasizing the urgency of implementing these measures.

With the adoption of the EU AI Act, important regulatory guidelines for the use of AI will take effect. Companies utilizing AI must adapt their existing processes and technologies to align with the regulatory requirements of the EU AI Act. MHP supports you in integrating these requirements into your processes, systems, and governance structures.

What is the EU AI Act?

The EU AI Act establishes the world’s first binding legal framework for the use of artificial intelligence. Its goal is to ensure that AI systems developed, marketed, or used in the EU are safe, transparent, and ethically sound. The regulation applies not only to companies within the EU but also to any organization whose AI systems are used in the EU market.

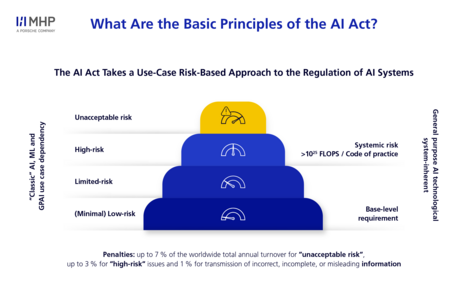

A key element of the regulation is its risk-based approach, which classifies AI systems into four categories: minimal, limited, high, and unacceptable risk. Depending on the risk level, companies must fulfill specific obligations and provide documentation. While limited-risk AI systems are mainly subject to transparency obligations and minimal-risk systems to no specific requirements, high-risk systems face stringent regulations. Non-compliance can result in significant fines.

The European Commission proposed the regulation in 2021, and it officially entered into force on August 1, 2024. Its obligations, however, are phased in: the prohibitions apply from February 2, 2025, and most remaining requirements, including those for high-risk AI systems, from August 2, 2026. Systems and models already on the market before the relevant dates are subject to transitional rules; for example, general-purpose AI models placed on the market before August 2, 2025, have until August 2, 2027, to comply.

The EU AI Act defines the term "AI system" in Article 3, based on the OECD’s definition:

“'AI system' means a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments (…)”.

Who is Affected by the EU AI Act?

The EU AI regulation impacts a wide range of stakeholders:

- "Provider": An authority, organization, or individual who develops, or commissions the development of, an AI system or a general-purpose AI model and places it on the market or puts it into service under its own name or brand, regardless of whether this is done for profit or free of charge.

- "Deployer": Any natural or legal person, authority, organization, or entity that uses an AI system under its authority, unless the AI system is used in the course of a personal, non-professional activity.

- "Distributor": Any natural or legal person in the supply chain who makes an AI system available on the EU market, excluding providers and importers.

- "Importer": Any natural or legal person based in the EU who introduces an AI system to the market under the name or brand of a person or entity located outside the EU.

- "Authorized representative": Any natural or legal person based in the EU who has received a written mandate from providers of an AI system or general-purpose AI model. These representatives accept the mandate to fulfill or implement the obligations and procedures specified in the regulation on behalf of the providers.

- "Product manufacturer": Manufacturers who market or operate an AI system in combination with their product under their own name or brand.

Categorization of AI Systems: Risk Classes

The core of the AI regulation lies in the risk assessment of deployed AI systems. Companies are required to conduct a risk evaluation for every AI system used within the European market, in line with the EU AI Act risk categories. AI systems developed exclusively for research purposes or as feasibility studies, and only operated within this scope, are not subject to the EU AI Act. The requirements for the different risk categories are outlined below:

Unacceptable risk

AI practices deemed by the legislators as a threat to the safety and rights of individuals within the EU are strictly prohibited. The EU AI Act explicitly bans systems that violate the values and rights of a liberal democratic order. Consequently, these systems cannot be used or marketed within the EU. Examples include:

- Real-time biometric systems for remote identification in publicly accessible spaces for law enforcement purposes (with a few exceptions).

- Algorithms for classifying individuals or groups based on their social behavior (social scoring).

- Manipulative systems that exploit the vulnerabilities of certain individuals or use subconscious triggers to influence their behavior and decision-making in ways that could cause physical or psychological harm.

- Systems that assess the risk of an individual committing a criminal offense based solely on profiling or personality traits (predictive policing).

High risk

High-risk systems have the potential to significantly impact the safety, health, and rights of individuals. As a result, these systems are subject to strict legal requirements regarding risk management, data quality, transparency, and robustness. Examples of high-risk systems include:

- Remote biometric identification and categorization systems of individuals (e.g., emotion recognition) based on sensitive or protected attributes, which may infer conclusions about these attributes and compromise privacy.

- Management and operation of critical infrastructures (e.g., as safety components in managing transportation networks or water supply systems), where malfunctions could have widespread consequences for the population.

- General and vocational education systems (e.g., access to or admission into institutions and the assessment of learning outcomes).

- AI systems used for recruitment or selection of individuals (e.g., job advertisements, application analysis and filtering) or for decisions affecting employment conditions, promotions, or terminations. These systems are particularly vulnerable to discrimination, and their decisions can have far-reaching consequences for individuals.

- Access to essential services (e.g., determining access to healthcare or housing services).

- Law enforcement applications (e.g., evaluating the reliability of evidence or profiling in criminal investigations).

- Border control management (e.g., assessing health or security risks of individuals entering the EU).

- Judicial and democratic processes (e.g., supporting courts in applying the law).

Limited risk

AI systems with limited risk are subject to less stringent requirements, consisting mainly of basic transparency obligations. These are intended to ensure that users are informed about the capabilities and limitations of the AI system, allowing them to make well-informed decisions. This includes an obligation to label AI-generated or AI-manipulated content. Examples include:

- Chatbots and virtual assistants, which must be clearly identifiable as such.

- Synthetic content, such as AI-generated images, videos, or texts.

Minimal risk

AI systems classified as “minimal risk” are considered by the legislator to pose very little or no risk to the safety, health, or fundamental rights of individuals. As a result, these systems are not subject to specific legal requirements. However, the EU encourages voluntary adherence to guidelines when developing AI systems. Examples of systems in this risk category include:

- Predictive maintenance applications

- Spellcheck and grammar assistance

- Spam filters

- Translation services

- Recommendation algorithms for videos and music
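To make the tier structure concrete for an internal inventory or governance tool, the risk classes above can be encoded as a small data structure. This is a minimal sketch under stated assumptions: the names `RiskTier`, `HEADLINE_OBLIGATIONS`, `obligations_for`, and `is_permitted` are hypothetical, the obligation summaries paraphrase this article rather than the legal text, and assigning a tier to a real system still requires a legal assessment.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers described in this article (hypothetical encoding)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Headline obligations per tier, paraphrased from this article (not legal text).
HEADLINE_OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.UNACCEPTABLE: ["prohibited: may not be placed on the EU market or used"],
    RiskTier.HIGH: ["risk management", "data quality", "transparency", "robustness"],
    RiskTier.LIMITED: ["transparency towards users", "labeling of AI-generated content"],
    RiskTier.MINIMAL: [],  # no specific legal requirements; voluntary guidelines encouraged
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return the headline obligations for a tier (an empty list means none)."""
    return HEADLINE_OBLIGATIONS[tier]


def is_permitted(tier: RiskTier) -> bool:
    """Only unacceptable-risk systems are banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


# Example classifications taken from the lists above.
EXAMPLES = {
    "spam filter": RiskTier.MINIMAL,
    "customer-service chatbot": RiskTier.LIMITED,
    "CV screening for recruitment": RiskTier.HIGH,
    "social scoring": RiskTier.UNACCEPTABLE,
}

if __name__ == "__main__":
    for name, tier in EXAMPLES.items():
        print(f"{name}: {tier.value}, permitted={is_permitted(tier)}")
```

Keeping the obligations in one lookup table means that a change in interpretation is made in a single place rather than scattered across the tool.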

Special consideration of general purpose AI

General Purpose AI (GPAI) refers to AI models that can be used for a wide range of applications and tasks, rather than being developed for a specific purpose or use case (e.g., ChatGPT). While GPAI models significantly benefit the workforce, they also pose potential risks to society and the economy.

Providers of GPAI models are subject to basic requirements, particularly regarding the technical documentation of the models. Additionally, GPAI models trained with very high computational power (more than 10^25 floating-point operations, or FLOPs, in total) are classified as GPAI models with “systemic risks”. Furthermore, the EU and various stakeholder groups are developing a Code of Practice (a first draft of the GPAI Code of Practice was published on November 14, 2024). This Code of Practice is likely to form the basis for GPAI standards.
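The systemic-risk presumption for GPAI models is a plain compute comparison, which can be sketched as follows. The threshold counts the total floating-point operations used in training, not operations per second. The `estimate_training_flop` helper relies on the widely used rule of thumb of roughly 6 FLOPs per parameter per training token for dense transformer models; that heuristic is an assumption of this sketch, not a method prescribed by the EU AI Act, and the model sizes in the example are hypothetical. The sketch only covers the compute threshold, not any other route by which a model could be classified.

```python
# Presumption threshold from the EU AI Act: cumulative training compute
# greater than 10^25 floating-point operations (a total count, not a rate).
SYSTEMIC_RISK_THRESHOLD_FLOP = 1e25


def estimate_training_flop(n_parameters: float, n_training_tokens: float) -> float:
    """Rough estimate for dense transformer training: about 6 FLOPs per
    parameter per training token. This 6*N*D rule of thumb is an assumption
    of this sketch, not a method set out in the regulation."""
    return 6.0 * n_parameters * n_training_tokens


def presumed_systemic_risk(training_flop: float) -> bool:
    """True if cumulative training compute exceeds the 10^25 FLOP threshold."""
    return training_flop > SYSTEMIC_RISK_THRESHOLD_FLOP


if __name__ == "__main__":
    # Hypothetical models: (parameters, training tokens)
    for params, tokens in [(7e10, 1.5e13), (4e11, 1.5e13)]:
        flop = estimate_training_flop(params, tokens)
        print(f"{params:.0e} params, {tokens:.0e} tokens -> {flop:.1e} FLOP, "
              f"systemic risk presumed: {presumed_systemic_risk(flop)}")
```

With these inputs, the first hypothetical model (about 6.3 × 10^24 FLOPs) stays below the threshold, while the second (about 3.6 × 10^25 FLOPs) exceeds it.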

GPAI models fall into two categories: models with systemic risks and all other GPAI models.

Providers of GPAI models with systemic risks must additionally meet the following requirements:

- Conduct model assessments

- Risk assessment and mitigation

- Documentation of all serious incidents and corrective actions, and reporting to the EU

- Adversarial testing (red teaming)

- Cybersecurity and physical protection measures

- Documentation and reporting of the model's estimated energy consumption

All GPAI model providers must meet the following basic requirements:

- Up-to-date technical documentation

- Provision of information for downstream providers

- Implementation of policies to comply with EU copyright law

- A sufficiently detailed summary of the content used for training
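Because the systemic-risk duties come on top of the basic duties rather than replacing them, the two lists above combine additively. A minimal sketch; the constant and function names are hypothetical, and the obligation labels paraphrase this article rather than the legal text:

```python
# Obligation lists paraphrased from this article (not legal text).
BASELINE_GPAI_OBLIGATIONS = (
    "up-to-date technical documentation",
    "information for downstream providers",
    "policy to comply with EU copyright law",
    "summary of the content used for training",
)

SYSTEMIC_RISK_OBLIGATIONS = (
    "model evaluations",
    "risk assessment and mitigation",
    "serious-incident documentation and reporting to the EU",
    "adversarial testing (red teaming)",
    "cybersecurity and physical protection",
    "documentation of estimated energy consumption",
)


def gpai_obligations(systemic_risk: bool) -> list[str]:
    """Basic obligations apply to every GPAI model; systemic-risk
    obligations are added on top rather than replacing them."""
    obligations = list(BASELINE_GPAI_OBLIGATIONS)
    if systemic_risk:
        obligations.extend(SYSTEMIC_RISK_OBLIGATIONS)
    return obligations


if __name__ == "__main__":
    for flag in (False, True):
        print(f"systemic_risk={flag}: {len(gpai_obligations(flag))} obligations")
```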

AI compliance: penalties for non-compliance

Failure to comply with the provisions of the EU AI Act may result in significant fines: up to €35 million or 7 % of total worldwide annual turnover, whichever is higher, for violations of the prohibitions in Article 5, and up to €15 million or 3 % of total worldwide annual turnover, whichever is higher, for most other violations. Companies also risk significant reputational damage with the public and within their industry. Given the milestones in the EU AI Act timeline, businesses should prepare proactively and ensure compliance to avoid these risks.
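For companies, each fine ceiling is whichever is higher of the fixed amount and the turnover-based amount, so the ceiling grows with company size once the turnover share exceeds the fixed amount. The sketch below computes that ceiling; the names `FINE_CEILINGS` and `max_fine_eur` are hypothetical, and the result is an upper bound, not a prediction of the fine an authority would actually impose. For SMEs, including start-ups, the regulation applies the lower of the two amounts instead, which the optional `sme` flag models.

```python
from decimal import Decimal

# Fine ceilings as stated above: (fixed amount in EUR, share of worldwide annual turnover).
FINE_CEILINGS = {
    "prohibited_practice": (Decimal("35000000"), Decimal("0.07")),
    "other_violation": (Decimal("15000000"), Decimal("0.03")),
}


def max_fine_eur(violation: str, worldwide_turnover_eur: Decimal, sme: bool = False) -> Decimal:
    """Upper bound of the fine, not the fine actually imposed.

    For companies in general the higher of the two amounts applies;
    for SMEs, including start-ups, the lower of the two applies.
    """
    fixed_cap, turnover_share = FINE_CEILINGS[violation]
    turnover_cap = turnover_share * worldwide_turnover_eur
    return min(fixed_cap, turnover_cap) if sme else max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    for turnover in (Decimal("100000000"), Decimal("1000000000")):
        cap = max_fine_eur("prohibited_practice", turnover)
        print(f"turnover EUR {turnover:,.0f}: ceiling EUR {cap:,.0f}")
```

For a worldwide annual turnover of €1 billion, for example, the ceiling for a prohibited practice is €70 million (7 %), since that exceeds €35 million; at €100 million turnover, the fixed €35 million applies.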

What deadlines are relevant for companies?

February 2, 2025: Six months after the EU AI Act comes into force, companies must ensure they are not using AI systems with unacceptable risk. Such systems are prohibited and must be removed from the market. Non-compliance can result in hefty fines.

August 2, 2025: Twelve months after the EU AI Act comes into force, the obligations for General Purpose AI (GPAI) models apply. New GPAI models placed on the market from this date must meet all relevant requirements.

August 2, 2026: Twenty-four months after the EU AI Act comes into force, the requirements for most high-risk AI systems will apply. Companies must ensure these systems meet all regulatory standards.

August 2, 2027: Thirty-six months after the EU AI Act comes into force, GPAI models placed on the market before August 2, 2025, must fully comply with the new requirements.

For further deadlines, refer to the EU AI Act Implementation Timeline on the official EU AI Act website.
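Since each milestone is defined as a number of months after entry into force, the timeline can be kept as data and queried for what is still ahead. A minimal sketch using only the standard library; `MILESTONES`, `upcoming`, and `months_after_entry_into_force` are hypothetical names, and the labels summarize the deadlines above rather than quoting the regulation:

```python
from datetime import date

ENTRY_INTO_FORCE = date(2024, 8, 1)

# Milestones from the list above; labels are paraphrased, not legal text.
MILESTONES: list[tuple[date, str]] = [
    (date(2025, 2, 2), "prohibitions on unacceptable-risk AI apply"),
    (date(2025, 8, 2), "obligations for new GPAI models apply"),
    (date(2026, 8, 2), "requirements for high-risk AI systems apply"),
    (date(2027, 8, 2), "deadline for GPAI models already on the market"),
]


def months_after_entry_into_force(d: date) -> int:
    """Whole calendar months between entry into force and a milestone date."""
    return (d.year - ENTRY_INTO_FORCE.year) * 12 + (d.month - ENTRY_INTO_FORCE.month)


def upcoming(as_of: date) -> list[tuple[date, str]]:
    """Milestones on or after the given date, in chronological order."""
    return sorted((d, label) for d, label in MILESTONES if d >= as_of)


if __name__ == "__main__":
    today = date(2025, 1, 30)  # the article's publication date
    for d, label in upcoming(today):
        print(f"{d.isoformat()} (+{months_after_entry_into_force(d)} months): "
              f"{label}, {(d - today).days} days away")
```

Run as of the publication date, January 30, 2025, it lists all four milestones, the first of them three days away.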

Is Your Company Facing the Following Pain Points?

With new regulations like the EU AI Act, businesses are encountering uncertainties, as there are no predefined blueprints that can be universally applied to every company, especially when dealing with such novel technologies. Furthermore, the establishment of governance structures for managing AI must be carefully integrated into the existing process and infrastructure landscape to meet the company's specific needs.

Creating a risk assessment may initially seem like a significant hurdle, as many companies are not yet familiar with this type of risk evaluation. Moreover, businesses often lack the internal resources and expertise needed to implement the requirements of the EU AI Act in daily operations. Given the tight deadlines for implementing these far-reaching requirements, compliance with the EU AI Act becomes a formidable challenge.

How to Meet the First Deadline of the EU AI Act

By February 2, 2025, companies must ensure that no AI systems with unacceptable risks are used within their ecosystem. The following steps will help you meet this deadline:

Identifying and inventorying AI systems

The first step for companies is to gain an overview of how AI is being used across the organization and to document it accordingly. This inventory can be compiled manually or with automated tools, depending on the company's needs, and should cover all use cases involving AI applications. In the initial phase, it is crucial to identify and discontinue any prohibited AI applications before the legal deadline of February 2, 2025.
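An inventory is essentially a list of structured records that can be filtered, for example to find prohibited applications that are still in use. The sketch below assumes one simple record per AI use case; the class and field names (`AIUseCase`, `business_owner`, `role`, `risk_tier`, `in_use`) and the sample entries are hypothetical, and in practice the inventory would typically live in a governance tool or database rather than in code.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AIUseCase:
    """One inventory entry; all field names are hypothetical."""
    name: str
    business_owner: str  # accountable unit or person
    role: str            # the company's role, e.g. "provider" or "deployer"
    risk_tier: RiskTier  # result of the risk assessment
    in_use: bool         # currently operated or offered


def to_discontinue(inventory: list[AIUseCase]) -> list[AIUseCase]:
    """Entries still in use that fall into the prohibited (unacceptable-risk) tier."""
    return [u for u in inventory if u.in_use and u.risk_tier is RiskTier.UNACCEPTABLE]


# Hypothetical sample entries for illustration only.
EXAMPLE_INVENTORY = [
    AIUseCase("E-mail spam filter", "IT", "deployer", RiskTier.MINIMAL, True),
    AIUseCase("Website chatbot", "Marketing", "deployer", RiskTier.LIMITED, True),
    AIUseCase("Applicant ranking", "HR", "deployer", RiskTier.HIGH, True),
    AIUseCase("Customer social scoring pilot", "Sales", "provider", RiskTier.UNACCEPTABLE, True),
]

if __name__ == "__main__":
    for use_case in to_discontinue(EXAMPLE_INVENTORY):
        print(f"Discontinue before 2025-02-02: {use_case.name} (owner: {use_case.business_owner})")
```

Recording the company's role for each use case is a design choice that pays off later, because the EU AI Act assigns different obligations to providers and deployers.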

Implementing compliance measures

Successfully implementing the EU AI Act requires comprehensive AI governance, supported by appropriate software tools for documenting, assessing, and monitoring AI systems. The starting point is enabling the company to classify its AI systems and determine their risk levels, such as “Unacceptable Risk” or “High Risk”.

In particular, high-risk applications are subject to a wide range of sometimes extensive requirements, including:

- Establishing a risk management system

- Creating documentation and technical records

- Ensuring data quality

- Ensuring transparency towards users

- Implementing human oversight

- Ensuring robustness and cybersecurity

- Ensuring transparency in AI usage

- Implementing quality management
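For each high-risk system, progress on the requirement areas listed above can be tracked as a simple checklist. This is a minimal sketch: `HighRiskChecklist` and its methods are hypothetical, the requirement labels are copied from the list above, and each area in practice breaks down into many individual measures.

```python
from dataclasses import dataclass, field

# Requirement areas for high-risk AI systems, as listed above.
HIGH_RISK_REQUIREMENTS = (
    "risk management system",
    "documentation and technical records",
    "data quality",
    "transparency towards users",
    "human oversight",
    "robustness and cybersecurity",
    "transparency in AI usage",
    "quality management",
)


@dataclass
class HighRiskChecklist:
    """Tracks which requirement areas are done for one system (hypothetical helper)."""
    system_name: str
    done: set[str] = field(default_factory=set)

    def mark_done(self, requirement: str) -> None:
        # Reject labels outside the known list to catch typos early.
        if requirement not in HIGH_RISK_REQUIREMENTS:
            raise ValueError(f"unknown requirement: {requirement!r}")
        self.done.add(requirement)

    def open_items(self) -> list[str]:
        """Outstanding requirement areas, in the order listed above."""
        return [r for r in HIGH_RISK_REQUIREMENTS if r not in self.done]


if __name__ == "__main__":
    checklist = HighRiskChecklist("Applicant ranking")
    checklist.mark_done("risk management system")
    checklist.mark_done("human oversight")
    print(f"{checklist.system_name}: {len(checklist.open_items())} open items")
    for item in checklist.open_items():
        print(" -", item)
```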

The necessary compliance structures should incorporate the following key elements:

- Defining responsibilities

- Establishing processes for compliance assessment

- Implementing monitoring and documentation systems

Planning and training of employees

Proactive planning and comprehensive employee training are essential to ensure compliance with legal deadlines. Article 4 of the EU AI Act explicitly calls for the promotion of “AI Literacy”. Therefore, companies must ensure that their employees have the necessary knowledge and skills to use and monitor AI systems safely and responsibly. Additionally, appropriate governance structures, responsibilities, risk management processes, and quality management systems are needed. These should align with the phased implementation timeline of the EU AI Act.

Outlook on the Next Deadline

If your company does not provide general-purpose AI models, the next key deadline is August 2, 2026. By this date, the requirements for high-risk AI systems must be fully met. These regulations are particularly strict and comprehensive. MHP is already assisting several companies with the implementation of high-risk requirements. Even though the deadline may seem far off, numerous measures need to be implemented. The sooner you start, the better prepared you will be!

How MHP Actively Supports You in Implementing the EU AI Act

With MHP’s experts, you can approach the implementation in a structured and efficient manner. We offer holistic consulting with an end-to-end concept, ranging from the inventory of your AI systems to the development of a risk management strategy and the implementation of necessary compliance measures. In addition, MHP provides comprehensive technical consulting and helps you implement tailored solutions for your business. Furthermore, we help you design and implement training concepts that meet the EU AI Act's requirements, ensuring your employees are well prepared for the use of AI, and advise you on the introduction of new roles.

Conclusion: Implement the EU AI Act Efficiently with MHP’s Expertise

The EU AI Act presents significant new challenges for businesses, as extensive adjustments in processes and technologies are required. The strict deadlines and comprehensive regulations demand early and careful planning. According to a Bitkom study (2024), 69 % of surveyed companies indicate they need support in dealing with the AI regulation.

MHP has already assisted numerous companies in successfully implementing the EU AI Act and offers practical support throughout the entire process. With our extensive experience in compliance implementation, we know what steps are necessary to meet regulatory requirements in a timely and legally compliant manner. Rely on MHP as your partner for Trustworthy AI, and implement processes that provide security and innovation in the field of Artificial Intelligence.

Disclaimer:

The information provided about the AI Act is for general informational purposes only and does not constitute legal advice. Despite the utmost care taken in creating this document, it does not claim to be exhaustive or accurate.

FAQ

The EU AI Act applies to all AI systems developed, provided, or used within the EU. The regulation categorizes these systems into unacceptable, high, limited, and minimal risk, as well as general-purpose AI systems (GPAI). GPAI models and high-risk AI systems, such as those in the fields of healthcare, safety, and law enforcement, are subject to particularly strict regulations.

The first steps to comply with the EU AI Act include identifying and assessing the risks of the AI systems in use, as well as eliminating prohibited AI applications with unacceptable risks. Companies should then implement necessary compliance measures covering all relevant functions and the entire lifecycle of their AI systems. Establishing transparent documentation and monitoring processes is essential to ensure regulatory compliance. Early planning and staff training are also crucial to meet legal deadlines.

MHP supports companies in establishing the governance structures required to comprehensively meet the EU AI Act’s requirements. MHP provides guidance on key areas such as inventorying AI systems, risk assessment, training concepts, and implementing necessary compliance measures. By offering tailored solutions that integrate seamlessly into existing corporate processes, MHP creates structural frameworks that enable companies to comply with legal requirements efficiently and on time.